Alternative Architecture for Exchange On-Premises (Virtualization)

Andrew Higginbotham

In my previous article in this series, we discussed Exchange “Alternative Architecture” options for medium-to-large businesses. We specifically covered common storage design options and which of them best suit a customer that has decided to remain on-premises and not follow the Preferred Architecture. To reiterate, I’m a big fan of Office 365 and the Preferred Architecture, but I understand many customers will follow neither route. If they deviate from both options, they should at least follow the recommended guidance that can increase the uptime and improve the performance of their solution.

With that said, we’ll now cover a commonly encountered technology in Exchange deployments: virtualization. Many Exchange support escalations I’ve worked have centered around architectural mistakes which could’ve been avoided by adherence to supportability guidelines and best practices. With that in mind, let’s discuss a core principle around Exchange virtual architecture.

This Exchange 2013 Virtualization Best Practices guide from Microsoft is still accurate for Exchange 2016 and should be closely followed. Yet if I were to pick the single deviation from best practice which harms Exchange deployments most often, it would be CPU sizing. I previously covered this topic in my “CPU Contention and Exchange Virtual Machines” ENow post, but I’d like to expand upon what CPU overcommitment really means and how it can affect design decisions.

Let’s attempt to define some virtualization terminology in a simplistic manner using a specific Intel processor as an example:

CPU Socket = Physical connector on a motherboard where a physical CPU chip is connected. To be precise, it is the mechanical component that allows the electrical connections between a microprocessor and a printed circuit board. However, you’ll commonly see the term CPU Socket used colloquially to refer to the physical chip itself. For instance, if a server has two Intel i7-7920HQ processors, it may be referred to as a “two socket system.”

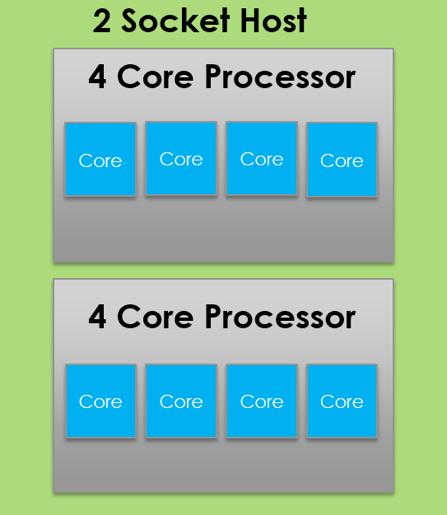

CPU Core = Individual processing unit. Most modern CPU chips have multiple cores, or “Physical CPU Cores.” Using the same Intel i7-7920HQ as an example, each Intel CPU chip has 4 physical CPU cores. So in this two socket system, there would be 8 physical CPU cores.

Logical Processor = When using Hyper-Threading (HT), each physical CPU core can have two threads. When HT is enabled in the BIOS, the operating system will see twice the number of CPU cores. With the same two socket Intel i7-7920HQ system as an example, while the system will still have 8 physical CPU cores, the operating system will detect 16 “Logical Processors.” As far as the operating system is concerned, it sees 16 physical CPU cores, but they are in fact Logical Processors. There is a myth that enabling HT truly delivers twice the processing power when in fact it does not. This becomes important when performing capacity/performance planning in virtual environments.

Virtual CPU = As the name implies, when using virtualization, a Virtual CPU or vCPU is a virtual CPU core which is assigned to a particular virtual machine. A vCPU can be treated as a virtual representation of a physical CPU core on the host. Our same two socket Intel i7-7920HQ example system with Hyper-Threading enabled will have 8 physical CPU cores and 16 logical processors presented to the Hyper-V host, and you can assign up to 16 vCPUs to each virtual machine on that host. If Hyper-Threading were not enabled, you would only be able to assign 8 vCPUs to each virtual machine on that host. I make a point to emphasize “each” virtual machine because with virtualization, you can overcommit CPU cores; it’s one of the many benefits of virtualization. Unlike memory, where only one piece of data can occupy a particular memory address at a time, physical CPU cores can be shared across many virtual machines.

Note: Socket, Core, Logical Processor, and vCPU terminology may change between hypervisor vendors, but for the purposes of this blog post, a single vCPU is synonymous with a single virtual core.
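The relationship between these four terms can be sketched in a few lines of Python. This is only an illustration of the arithmetic in the definitions above; the `HostCpu` class and its property names are made up for this post and are not part of any hypervisor API.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class HostCpu:
    """Hypothetical model of a hypervisor host's CPU topology."""
    sockets: int
    cores_per_socket: int
    hyper_threading: bool

    @property
    def physical_cores(self) -> int:
        # Physical cores = sockets x cores per CPU chip.
        return self.sockets * self.cores_per_socket

    @property
    def logical_processors(self) -> int:
        # With Hyper-Threading, each physical core presents two threads.
        return self.physical_cores * (2 if self.hyper_threading else 1)

    @property
    def max_vcpus_per_vm(self) -> int:
        # Per the article, a Hyper-V VM can be assigned at most as many
        # vCPUs as the host has logical processors.
        return self.logical_processors


# The two socket, 4-cores-per-chip example system with HT enabled.
host = HostCpu(sockets=2, cores_per_socket=4, hyper_threading=True)
print(host.physical_cores, host.logical_processors, host.max_vcpus_per_vm)
# prints: 8 16 16
```

Setting `hyper_threading=False` on the same host drops the per-VM limit to 8 vCPUs, while the physical core count stays at 8.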

Let’s get a visual representation of this. In the below image, you have our 2 socket Hyper-V host with 8 physical CPU cores.

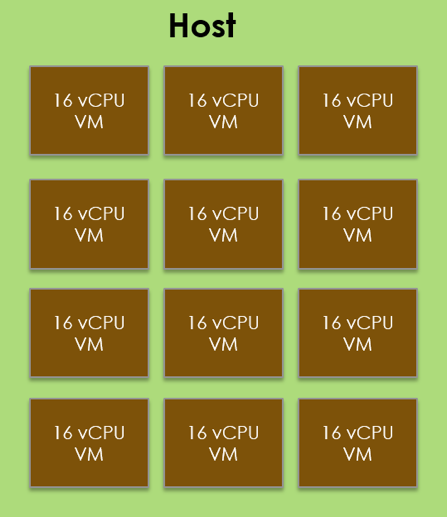

Now let’s say we enable Hyper-Threading in the BIOS, causing the Windows operating system to detect 16 logical processors. To a Windows Hyper-V host, the maximum number of vCPUs assignable to a virtual machine is equal to the number of logical processors detected. For instance, in the below image we have 16 virtual machines on this host, each with 16 vCPUs assigned.

This brings the total number of assigned vCPUs on this host to 256. Remember, we have 16 logical processors on the host due to Hyper-Threading, but we only truly have the processing power of the 8 physical CPU cores. So each of these virtual machines believes it has access to the processing power of 16 physical CPU cores when in fact they’re all sharing 8 physical CPU cores. That is 256 vCPUs to 8 physical CPU cores, a ratio that reduces to 32:1, and it doesn’t even factor in the processing power required for the hypervisor OS itself. This ratio becomes extremely important to the Microsoft support stance for virtualized Exchange servers.

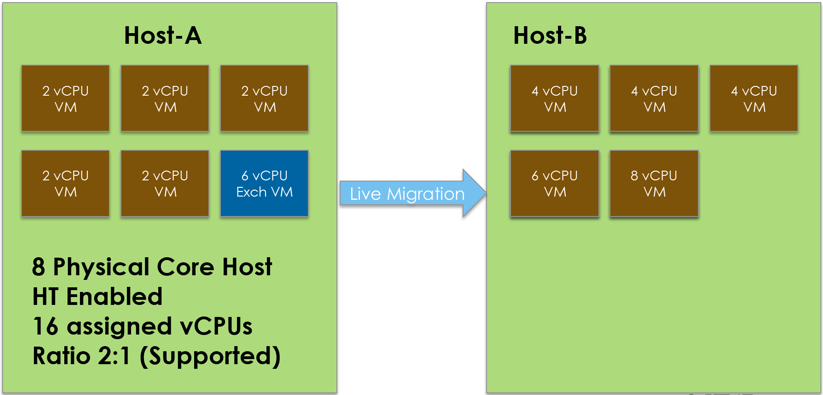

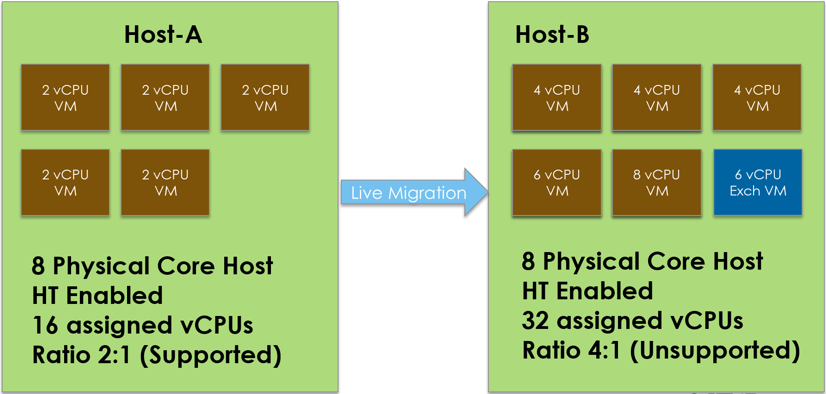
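As a quick sanity check of the 32:1 figure, here is a minimal Python sketch of the same arithmetic. The function name `overcommit_ratio` is my own; the inputs are the 16 virtual machines with 16 vCPUs each and the 8 physical cores from the example host.

```python
from fractions import Fraction


def overcommit_ratio(vcpus_per_vm: list[int], physical_cores: int) -> Fraction:
    """Total assigned vCPUs divided by physical cores, as a reduced fraction."""
    return Fraction(sum(vcpus_per_vm), physical_cores)


# 16 virtual machines, each assigned 16 vCPUs, on a host with 8 physical cores.
vms = [16] * 16
ratio = overcommit_ratio(vms, physical_cores=8)

print(f"{sum(vms)} vCPUs on 8 cores = {ratio.numerator}:{ratio.denominator}")
# prints: 256 vCPUs on 8 cores = 32:1
```

Note that the divisor is the physical core count, not the 16 logical processors the host reports.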

Microsoft states that for virtualized Exchange servers, the assigned vCPU to Physical CPU core ratio on a hypervisor host system can be no greater than 2:1 (Reference). While hypervisors are designed to efficiently share physical CPU resources across all assigned vCPUs, this still comes at a cost: time and latency. CPU overcommitment is acceptable and often expected in virtual environments, but it largely depends on the workload and what the vendor chooses to support. For instance, Desktop Virtualization (VDI) solutions can often function perfectly fine with a ratio as high as 24:1. After all, a Windows 10 client machine has very different latency requirements than an Exchange database. Therefore, the Exchange Product Team chooses not to support virtualized Exchange when the vCPU-to-Physical CPU Core ratio exceeds 2:1. They actually recommend 1:1 for best performance, but 2:1 is supported.

Note: It’s important to remember that I’m saying vCPU-to-Physical CPU Core ratios. This means logical processors do not matter, only physical CPU Cores. Therefore, even if you have Hyper-Threading enabled, you should size as though it is not.
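To make the sizing rule concrete, here is a small Python sketch that classifies a host against the 1:1 recommendation and the 2:1 support limit described above. The function and constant names are my own invention for illustration; the function deliberately takes only physical cores as input, so Hyper-Threading never enters the calculation.

```python
MAX_SUPPORTED_RATIO = 2.0   # 2:1 vCPU-to-physical-core limit cited above
RECOMMENDED_RATIO = 1.0     # 1:1 recommended for best performance


def classify_host(total_vcpus: int, physical_cores: int) -> str:
    """Classify a host's vCPU-to-physical-core ratio for virtualized Exchange."""
    ratio = total_vcpus / physical_cores
    if ratio <= RECOMMENDED_RATIO:
        return "recommended"
    if ratio <= MAX_SUPPORTED_RATIO:
        return "supported"
    return "unsupported"


# An 8-core host (16 logical processors with Hyper-Threading enabled).
print(classify_host(total_vcpus=8, physical_cores=8))    # recommended
print(classify_host(total_vcpus=16, physical_cores=8))   # supported
print(classify_host(total_vcpus=24, physical_cores=8))   # unsupported
```

The last case is the trap: 24 vCPUs against 16 logical processors looks like a comfortable 1.5:1, but against the 8 physical cores it is 3:1.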

Now how does this translate to Exchange architecture design decisions? Well, you should obviously ensure you do not exceed the 2:1 ratio if you want Microsoft to support the solution. However, what can you do to ensure your design stays within that ratio over time? How might a supported solution become an unsupported one?

In the below scenario, Host-A is in a supported state (as far as Exchange is concerned). But what happens when a hapless Jr virtualization admin live migrates the Exchange VM to Host-B?

Simply by moving a virtual machine from one host to another, a supported solution becomes an unsupported one. I discuss examples of how this can actually impact the performance of Exchange servers in my previous ENow post titled “CPU Contention and Exchange Virtual Machines.” Architecturally speaking, this means ensuring your virtual environment makes good use of affinity, resource scheduling, and monitoring features so that no production Exchange virtual machine can be moved in a way that places it into an unsupported state. Similarly, you should ensure that the movement of other virtual workloads does not affect the supportability and performance of Exchange.
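The kind of pre-migration check described above can be sketched in Python. Everything here is hypothetical: the `Host` class, the VM names, and the vCPU counts are invented for illustration and do not come from the scenario image or from any hypervisor's scheduling API. In practice you would rely on your hypervisor's own affinity and resource scheduling rules.

```python
from dataclasses import dataclass, field


@dataclass
class Host:
    """Hypothetical hypervisor host: physical cores plus VM name -> vCPU count."""
    name: str
    physical_cores: int
    vm_vcpus: dict[str, int] = field(default_factory=dict)

    def ratio_with(self, extra_vcpus: int = 0) -> float:
        # vCPU-to-physical-core ratio if extra_vcpus were added to this host.
        return (sum(self.vm_vcpus.values()) + extra_vcpus) / self.physical_cores


def can_host_exchange(dest: Host, vm_vcpus: int, limit: float = 2.0) -> bool:
    """True if moving a VM with vm_vcpus to dest keeps dest at or under limit."""
    return dest.ratio_with(vm_vcpus) <= limit


# Made-up inventory: Host-A runs Exchange at 12 vCPUs on 8 cores (1.5:1).
host_a = Host("Host-A", 8, {"EXCH01": 8, "FILE01": 4})
# Host-B already has 16 vCPUs assigned on 8 cores (2:1).
host_b = Host("Host-B", 8, {"SQL01": 8, "WEB01": 4, "WEB02": 4})

exchange_vcpus = host_a.vm_vcpus["EXCH01"]
print(host_a.ratio_with())                        # 1.5
print(host_b.ratio_with(exchange_vcpus))          # 3.0
print(can_host_exchange(host_b, exchange_vcpus))  # False
```

The same check applies in reverse: before any other workload is moved onto a host that runs Exchange, the destination's resulting ratio should be evaluated the same way.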

For additional information regarding Exchange virtualization, NUMA node boundaries, and other Exchange sizing guidance, see this blog series from a VMware design and performance expert.

Andrew Higginbotham

I work for Dell as a Principal Engineer in the Global Support & Deployment organization, serving as a senior point of escalation for Microsoft technologies to Dell's ProSupport & Consulting Services customers. My specialties are Exchange, Office 365, & Directory Services, while also being responsible for internal training development/delivery & managing various projects. I'm a Microsoft Certified Master in Exchange 2010 & a Microsoft Certified Solutions Master in Exchange 2013. I enjoy getting to work with a wide variety of customers, as it allows me to assist in resolving the various complexities & challenges they encounter. I try to use that experience to help others by blogging at Exchangemaster.wordpress.com with a few of my fellow MCMs at Dell. I also co-founded an Exchange Server community on Reddit (Reddit.com/r/ExchangeServer) where I answer questions on the product & help lead discussion around Exchange/Office 365. You'll also find me blogging about various other topics at ashdrewness.wordpress.com. I'm also the co-author of the Exchange Server Troubleshooting Companion (http://exchangeserverpro.com/ebooks/exchange-server-troubleshooting-companion/). I enjoy spending time with my lovely wife Lindsay, am a Texas Longhorns/Houston Texans fan, & try to sneak out onto a golf course every chance I get.
